Project update: I'm making lots of progress on the SpriteMusic sequencer and I think a demo video will be coming shortly. After the video release, a few more feature additions, and some cleanup, I will be looking for test users. Most of my development time since the last post has been spent on the audio engine and audio/video sync... even though I am still working at the 'alpha/prototype' stage, it is important to me that the audio quality be good enough to make something cool with.

After testing with both CSoundo and Pure Data via OSC, I have switched over to the 'Minim beta' sound library for various reasons, one of them being that it is fully integrated with Processing. Currently the audio engine is based on 5 bandlimited-wavetable oscillators, each switchable between pulse wave, triangle, noise, saw, sine and parabolic waveforms. Each instrument allows 60Hz modulation of (at least) Amplitude, Pitch (fine and arpeggio), Waveform (for PWM and wave-sequencing), and Pan.
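For readers unfamiliar with the idea, here is a rough sketch of what a bandlimited wavetable oscillator with a 60Hz control rate can look like. This is not the SpriteMusic engine code and does not use Minim; it is plain Python, and the sample rate (44100), table size (2048), and the function names `bandlimited_saw_table` and `render` are all assumptions made up for illustration. The saw is built by summing sine harmonics that stay below the Nyquist frequency, and the amplitude is only allowed to change once per control tick.

```python
import math

SAMPLE_RATE = 44100  # assumed; the post does not state a sample rate
TABLE_SIZE = 2048    # assumed table length

def bandlimited_saw_table(fundamental_hz, sample_rate=SAMPLE_RATE, size=TABLE_SIZE):
    """Build one cycle of a saw from sine harmonics that stay below Nyquist."""
    max_harmonic = int((sample_rate / 2) // fundamental_hz)
    # The table itself cannot hold harmonics at or above half its length.
    max_harmonic = min(max_harmonic, size // 2 - 1)
    table = [0.0] * size
    for k in range(1, max_harmonic + 1):
        amp = 1.0 / k  # saw spectrum: harmonic k has amplitude 1/k
        for i in range(size):
            table[i] += amp * math.sin(2 * math.pi * k * i / size)
    peak = max(abs(v) for v in table)
    return [v / peak for v in table]  # normalize to the range -1..1

def render(table, freq_hz, amp_per_tick, sample_rate=SAMPLE_RATE, control_hz=60):
    """Play the table at freq_hz; amplitude changes once per control tick."""
    samples_per_tick = sample_rate // control_hz
    step = freq_hz * len(table) / sample_rate  # table positions per sample
    phase = 0.0
    out = []
    for amp in amp_per_tick:
        for _ in range(samples_per_tick):
            out.append(amp * table[int(phase) % len(table)])
            phase = (phase + step) % len(table)
    return out

table = bandlimited_saw_table(440.0)
audio = render(table, 440.0, [1.0, 0.5])  # two control ticks of audio
```

A real engine would typically keep several tables per waveform (one per octave or so) and interpolate between table entries; this sketch skips both to stay short.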

Eventually there will be some form of user-drawable waves, and I may just throw in some 'regular sample playback' channels as well, for users who want to make 'chiptune plus edm drums' style tracks.

(Edit: I forgot to mention... I have also been heavily tempted to bump up the audio control-rate to 240Hz. Not *just* because I am inspired by all the Pulsar talk {Hi, Neil Baldwin!} but also because after re-reading a bunch of NES APU docs I realize that it has more capabilities than I thought for modulating things at that rate. On the other hand there is something magical in the 30/60Hz range where both the eye and the ear can detect changes, leading to the synaesthetic feeling I strive for. Also I'm reluctant to break up the nice 'one audio moment per card' paradigm. hmm.)
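To put rough numbers on that trade-off, here is a tiny calculation. The 44100Hz sample rate is an assumption (the actual rate is not stated here), and `samples_per_control_tick` is just a name made up for this example.

```python
def samples_per_control_tick(sample_rate, control_hz):
    """How many audio samples pass between two control-rate updates."""
    return sample_rate / control_hz

SAMPLE_RATE = 44100  # assumed sample rate

for control_hz in (30, 60, 240):
    print(control_hz, samples_per_control_tick(SAMPLE_RATE, control_hz))
# 30 1470.0
# 60 735.0
# 240 183.75

# At 240Hz there would be four audio updates for every 60Hz card.
updates_per_card = 240 // 60
```

Note that 240Hz does not divide 44100 evenly, so under this assumption the tick length would have to alternate between 183 and 184 samples (or the engine would need a fractional counter).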

I would like to have choices for different audio engines to make this more widely useful/appealing: for instance I will probably look into using Blargg's audio libraries for accurate NES-music playback. And output to hardware synths is still on my mind as well.

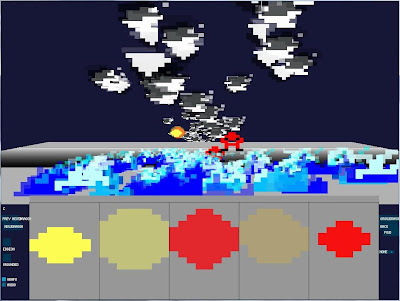

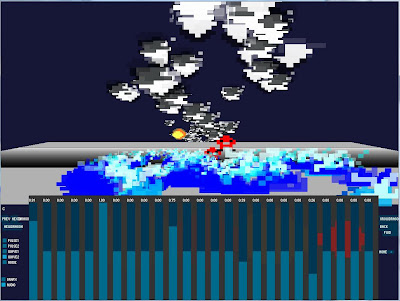

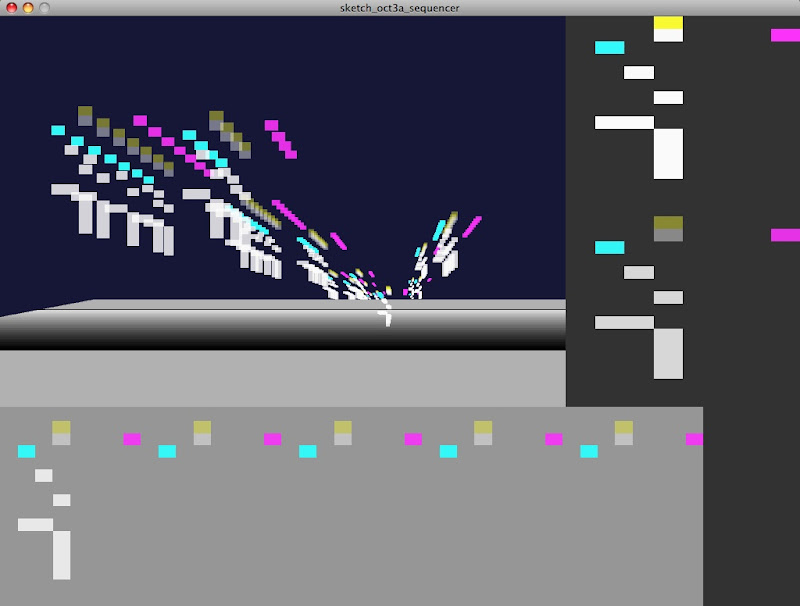

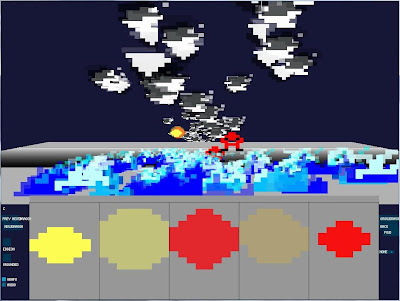

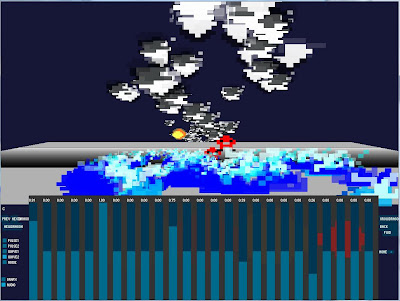

Here are a couple of new screens to look at, picked from nice moments in testing. Actually I've had these for a while, I just didn't get around to posting them. It does seem a little funny to keep posting screenshots when the audio and the animation are such an important part, so that's why I hope to be posting videos any day now. (Well, realistically, within a week is certainly possible.) And once I get some videos out I will endeavor to explain many more details of how you use the software.

1- This shows a newer version of the interface, and demonstrates multiple types of 'dragon' onscreen. It also demonstrates graphics which 'look like something', rather than being abstract. It was done by a test user (family member).

2- In the bottom panel, you can see the audio adjustment mode, where parameters are set on a 'per card' basis.

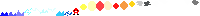

3- When saving files, the graphical part (sprite sheet) is saved to a PNG file, so it is fun to look at them and potentially you could edit them further with Photoshop, etc.

4- An older test shot, this is an abstract thing I made; it looked cool in motion.

The software seems to be running fine on both my Mac and Windows test machines. It's possible to slow down the graphical framerate with too many sprites on screen, but on the other hand I am still only using OpenGL in 'immediate mode', i.e. not really taking advantage of the graphics card. So I expect that a lot of further optimization is possible.

One thing to clarify is that the final version will have many options for automatically linking audio parameters to graphical adjustments. For instance, pitch >> height, pan >> xpos, scale or chord >> color, perhaps volume >> opacity, etc. But a lot of that linkage (and for now, most of it) is just 'done manually', so basically you can explore how you would like to link different sounds/modulations to different images. A deep hope I have for the project is that once I release this we can all have some really interesting conversations about 'how does one visualize music'.
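To make the idea of automatic linking a bit more concrete, here is a minimal sketch of the kind of mapping meant by pitch >> height, pan >> xpos and volume >> opacity. None of this is the actual SpriteMusic code: the function names (`rescale`, `sprite_params`), the MIDI-style note range of 36 to 96, the pan range of -1 to 1, and the 640x480 canvas are all assumptions chosen for the example.

```python
def rescale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from one range to another, clamped to the output range."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)

def sprite_params(note, pan, volume, width=640, height=480):
    """Derive hypothetical drawing parameters from audio parameters."""
    return {
        # pitch >> height: higher notes are drawn nearer the top (y = 0)
        "y": rescale(note, 36, 96, height, 0),
        # pan >> xpos: hard left is x = 0, hard right is x = width
        "x": rescale(pan, -1.0, 1.0, 0, width),
        # volume >> opacity: silent is transparent, full volume is opaque
        "opacity": rescale(volume, 0.0, 1.0, 0, 255),
    }

loud_high_center = sprite_params(note=96, pan=0.0, volume=1.0)
quiet_low_left = sprite_params(note=36, pan=-1.0, volume=0.0)
```

The interesting design questions start after this point, for example whether color should follow the scale degree, the chord, or the instrument.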